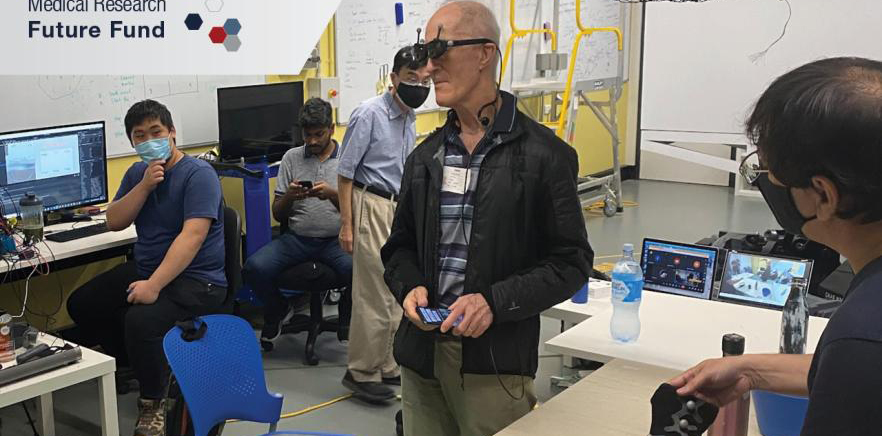

Sydney start-up ARIA Research is putting the smart specs through a clinical trial.

Sydney-based start-up ARIA Research has developed augmented reality glasses that allow people who are vision-impaired to navigate using spatial sound.

Objects detected by embedded machine vision cameras are translated by AI into a music-like soundscape, allowing users to experience the information provided by the glasses as an “enjoyable flow”, according to Robert Yearsley, CEO and co-founder of ARIA Research.

Training the AI to translate objects into a soundscape that users can understand has been an ongoing challenge for the researchers, as has shrinking the technology to fit in the arms of the glasses.

“Our first prototype was a helmet with a 12kg box of electronics. That is a science experiment, not a product,” said Mr Yearsley.

“Now we are working on the form factor to fit all the technologies in the arms of the glasses and make them robust. That means fundamental research to come up with new methods for miniaturising the systems, which is super exciting.”

Employing people who are vision-impaired as subject matter experts had been crucial to the development of the technology at every stage, Mr Yearsley said.

“The subject matter experts told us we needed to position the cameras so they could ‘see’ edges they might fall off,” he said.

“They also need to ‘see’ hazards at head height that their cane won’t detect, such as tree branches.

“It’s hard to get a complete vision of what you want, but putting the end users in front of the engineers and scientists is the absolute key. They explicitly say what we need to do to solve problems.”

ARIA Research has received $2.3 million in funding from the Medical Research Future Fund through MTPConnect's BioMedTech Horizons and Clinical Translation and Commercialisation Medtech programs.