It’s time to put an end to the evidence-based medicine madness

Anish Koka recently wrote a great piece entitled “In Defense of Small Data” that was published on The Health Care Blog.

While many doctors remain enamored with the promise of Big Data, or hold their breath in anticipation of the next mega clinical trial, Koka skillfully puts the vagaries of medical progress in their right perspective. More often than not, Koka notes, big changes come from astute observations by little guys with small data sets.

In times past, an alert clinician would make advances using her powers of observation, her five senses (as well as the common one) and, most importantly, her clinical judgment. She would produce a case series of her experiences, and others could try to replicate the findings and judge for themselves.

Today, this is no longer the case. We live in the era of “evidence-based medicine,” or EBM, which began about 50 years ago. Reflecting on the scientific standards that the medical field has progressively imposed on itself over the last few decades, I can make out that demands for better scientific methodology have ratcheted up four levels:

Beginning in the late 1960s, and then throughout the 1970s, some began to call attention to the need for better statistical science in research publications. Chief among those emphasising this problem was Alvan Feinstein. Another important figure was Stanton Glantz, and there were others as well. See, for example, this editorial in Circulation in 1980.

Then, in the late 1980s and early 1990s, the movement that coined the term EBM emerged, notably from McMaster University in Hamilton, Ontario. Names associated with that movement include David Sackett, Gordon Guyatt, Salim Yusuf, and others. Beyond the proper use of statistics, they demanded that clinical studies be designed properly, and they anointed the randomised double-blind clinical trial as the supreme purveyor of medical evidence. Others, like Ian Chalmers and the folks at the Cochrane Collaboration, developed methods of “meta-analysis” whereby different trials and studies on a particular question could be analyzed in aggregate.

The mid-to-late 1990s were the heyday of EBM. I remember the excitement in 1992 when the Evidence-Based Medicine Working Group published its teaching series in JAMA on how to properly interpret the medical literature. I was a senior in medical school at that time, and that series of papers quickly became the go-to reference for self-respecting doctors who wanted to demonstrate that they weren’t taking the conclusions of medical publications at face value. We would be able to judge for ourselves the validity of any new claim! And we began to systematically doubt old claims that were not backed by sufficient evidence. (The autobiography of EBM was recently sketched in JAMA here).

By the late 1990s, however, it became clear that EBM was having two major effects, and the promotion of clinical judgment was not one of them. On the one hand, EBM gave rise to “guideline medicine” and cookbook recommendations that would soon provide insurers and government agencies a method to gauge “quality of care” on a large scale and to tie performance to that quality. On the other hand, the methodology also played into the hands of large pharmaceutical companies who, through the implementation of large clinical trials, could now identify small effects that physicians would feel compelled to apply to large populations of patients.

The former effect was not so problematic for the promoters of EBM who, by and large, were made up of “systems experts” and outcomes researchers for whom the “rational” provision of care by way of clinical guidelines and “appropriate use criteria” seemed like a great boon.

But the latter effect, i.e., the benefits the pharmaceutical and medical device industry reaped through the use of the mega-trial, was a tough pill to swallow. Many in the EBM movement were not particularly thrilled to see their pet methodology placed at the service of major corporations, but they were hard-pressed to criticise them on that basis.

An opportunity to correct that trend came when the Vioxx scandal brought to light the fact that too cozy a relationship between scientists and corporations could lead to tampering with the precious evidence. It would not be long before the next level of demand on clinical science would be made: in addition to being proficient in the use of statistics and in the design of clinical studies, scientists would now also have to disclose any potential conflicts of interest, i.e., any financial relationship with a sponsoring corporation. Soon the sun would shine on the greed of doctors and scientists and, it was hoped, the truth would finally emerge victorious.

But even that has failed to satisfy the sceptics. After all, conflict of interest is undetectable and unavoidable. And the emphasis on conflicts of interest seems to have poisoned the atmosphere. Witness any debate between scientists on Twitter, and you will experience a new version of Godwin’s law:

The latest remedy concocted to deal with the vexing problem of scientific truth is data sharing, which I mentioned in a recent post. Soon, scientists may need to make all data forms, correspondence, and even their inner reflections, available to third parties on demand, so that, you know, we can finally be sure that the results are “true.”

I predict that statistics, optimal study designs, conflict of interest disclosures, and data sharing will never satisfy our yearnings for clinical truth. In recent days, I have been particularly struck by the epistemological crisis in which we seem to find ourselves, despite the fact that we have access to huge data sets. There is no longer any medical fact or truth that can be considered reliable. Scepticism is rampant and everything is in doubt, which is strange since there has never been more attention paid to being meticulous about science and methods.

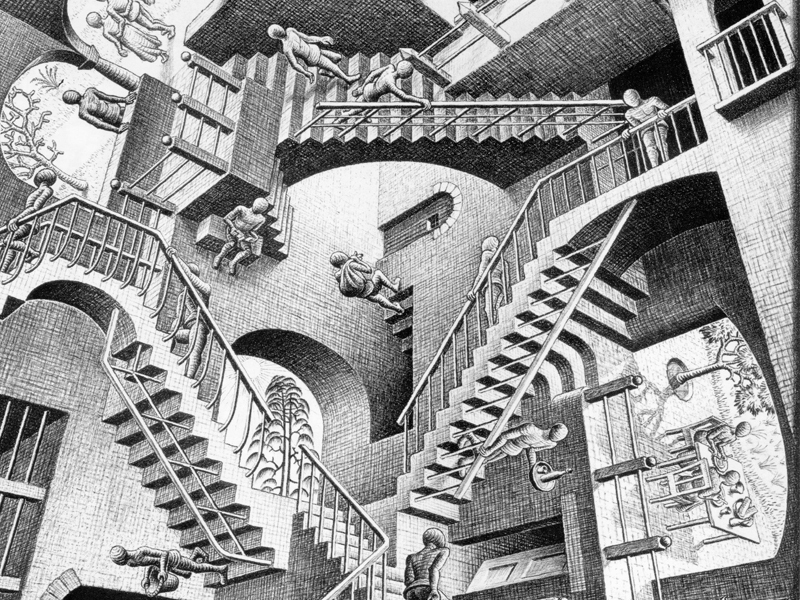

It’s like a paradox: the more we insist on scientific reliability, the less certain our knowledge seems to become. As I mentioned before, I believe this paradox arises in part because we are bent on applying to medicine quantitative methods that are more suited for the study of falling stones and quarks than for the proper understanding of human beings. Randomised trials provide us with general rules, but the business of medicine is to particularise. When the time comes to apply our knowledge at the bedside, the evolution of EBM seems like a devolution away from sound clinical judgment.

Also, quantitative methods have taken prominence because medicine has ceased to be a private affair between doctors and patients and has turned instead into a public health endeavour. Yet patients still expect us to treat them as individuals, and most doctors hope to practice on a human scale in the context of small communities. The focus on big data and big methods is a major distraction, and it has yet to convince me that we are better doctors as a result of it.

In his magnificent essay, Koka notes:

“I realise it has become dangerous to use one’s clinical experience to inform one’s views….Forcing all progress through the funnel of a million-strong clinical trial is a bar too high, a bar that protects us from medicine itself. No one benefits from that.”

I agree with my colleague Koka. It’s time to return to the small data set. It’s time to reclaim our ability to think, judge and reason for ourselves. It’s time to put an end to the EBM madness.

Michel Accad practices cardiology in San Francisco and blogs at alertandoriented.com where this post originally appeared.